The last post in this series ended by saying that academic research has a lot to offer in the way of innovative approaches to adaptation. These can improve accessibility for people with various impairments, whether due to disability or situation, and even for people with multiple impairments at the same time.

But how can the conflicting requirements of very large fonts and large touch targets be met on a small-screen device? How can an application fit within these seemingly irreconcilable constraints?

The SUPPLE project, led by Krzysztof Gajos, is a prime example. It has produced algorithms that can automatically generate user interfaces from more abstract specifications (i.e. the types of data presented to the user, or the input needed from the user). For example, if a numerical input is required, this might be expressed using a textbox in a traditional desktop app, or a spinner control with a sensible default value on a mobile device, where keyboard entry is more time-consuming. Buttons on mobile may be larger, with less spacing between them, to make the touch targets larger.

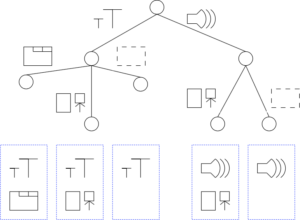
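To make the idea of an abstract specification a little more concrete, here is a minimal sketch in Python. It is not SUPPLE's actual code or API: the `NumberInput` class, the `choose_widget` function and the device names are all hypothetical, made up purely to illustrate how one abstract description could be rendered as different concrete controls.

```python
from dataclasses import dataclass


@dataclass
class NumberInput:
    """Abstract specification: the app needs a number from the user.

    Hypothetical type for illustration; it says nothing about which
    on-screen control should be used.
    """
    label: str
    minimum: int
    maximum: int
    default: int


def choose_widget(spec: NumberInput, device: str) -> dict:
    """Pick a concrete control for an abstract numeric input.

    Assumed rule of thumb: typing is slow on a phone, so prefer a
    spinner that starts at a sensible default; on a desktop, a plain
    textbox is fine.
    """
    if device == "mobile":
        return {
            "widget": "spinner",
            "label": spec.label,
            "value": spec.default,
            "min": spec.minimum,
            "max": spec.maximum,
        }
    return {"widget": "textbox", "label": spec.label, "value": spec.default}


volume = NumberInput(label="Volume", minimum=0, maximum=10, default=5)
print(choose_widget(volume, "desktop")["widget"])
print(choose_widget(volume, "mobile")["widget"])
```

The app only ever describes what it needs (a number between 0 and 10); the decision about how to ask for it is left to the renderer, which is what makes later adaptation possible.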

But there is much more to it than this: because the UI/content is specified in the abstract, its structure can be adapted where necessary. If all of the controls in a settings pane won’t fit onto the small screen of a mobile phone, then they can be “folded” into a tab panel, with related controls grouped on separate tabs and the most commonly-accessed controls on the first tab. Further, the font size (and other graphical properties) of the controls can be adjusted too. If making the font size larger means some controls won’t fit on the screen, then scrolling, the aforementioned folding, or some combination of the two (depending on which would be easiest for this individual user to navigate) can be used to re-arrange them.
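The folding described above can be sketched in a few lines of Python. This is a deliberately simple, greedy illustration and not the algorithm SUPPLE uses; the `Control` class, the `layout` function, the pixel heights and the usage counts are all assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Control:
    name: str
    group: str        # related controls share a group
    base_height: int  # assumed height in pixels at font scale 1.0
    uses: int         # assumed count of how often the user touches it


def layout(controls, screen_height, font_scale=1.0):
    """Return a list of (panel title, control names) pairs.

    If everything fits at the requested font scale, use one panel.
    Otherwise fold related controls into tabs, putting the group that
    contains the most-used control first.
    """
    total = sum(c.base_height * font_scale for c in controls)
    if total <= screen_height:
        return [("All", [c.name for c in controls])]

    groups = {}
    for c in controls:
        groups.setdefault(c.group, []).append(c)

    # Most commonly accessed group first, and most-used control first
    # within each tab.
    ordered = sorted(
        groups.items(),
        key=lambda item: max(c.uses for c in item[1]),
        reverse=True,
    )
    return [
        (title, [c.name for c in sorted(members, key=lambda c: c.uses, reverse=True)])
        for title, members in ordered
    ]


settings = [
    Control("Brightness", "Display", 40, 50),
    Control("Font size", "Display", 40, 10),
    Control("Wi-Fi", "Network", 40, 90),
    Control("Bluetooth", "Network", 40, 20),
]
print(layout(settings, screen_height=200))                  # one panel
print(layout(settings, screen_height=200, font_scale=2.0))  # folded into tabs
```

Doubling the font size here pushes the controls past the available height, so the same abstract description is re-arranged into two tabs rather than simply being magnified off the edge of the screen. A fuller version would also weigh scrolling against tabs according to what each user finds easiest.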

This allows content and interfaces to adapt to the user, regardless of the constraints imposed upon them by situational barriers (e.g. they’re using a phone rather than a full computer) or impairments (e.g. vision or motor difficulties). It’s also important to note that people’s preferences are taken into consideration, too—if you prefer tab panels to scrolling, or sliders to entering numbers, then those controls will be used when possible. If such an approach were adopted for apps and web content, we could have just the adaptations we need, without the compromises of assistive technologies that mimic physical interventions such as magnifiers.

Because of the abstract way in which interfaces are specified, one also wonders if they could be adapted for voice/conversational interaction, too—it would not take much additional effort to support such platforms…

How do we get there?

First of all, it would be great if platform developers and device manufacturers integrated novel approaches such as these into their systems. More than this, though, a common means of describing users’ needs is also required, one that works everywhere. Projects such as the Global Public Inclusive Infrastructure (GPII) aim to achieve this by having a standard set of user preferences that live in the cloud and can be served up to devices from ATMs to computers and TVs. When such devices adopt techniques like those above to help them adapt, services such as GPII could deliver the information about people, their preferences and their capabilities that would support that adaptation.

There’s one final barrier that has only been touched upon above: the lack of awareness people have of their own accessibility needs, and of the support that is already (or could be, as above) on offer. Many don’t identify with the term “accessibility” at all. Also, even if they had a set of preferences established, those preferences may not translate fully to a totally new device the first time they use it (multi-touch gestures aren’t usually applied to an ATM, and keyboards are not usually attached to phones). Therefore, it seems natural to work out what adaptations a user needs based on their capabilities as a human, such as their visual acuity and various measures of dexterity. If we could get even a rough idea of these factors, then devices and environments could be set up for someone in an accessible manner before they have even used them.

Summary

Current assistive technologies have been, and remain, instrumental in ensuring our independence. However, we can make such technologies more relevant to the mainstream by capitalising upon current trends, and by learning from novel techniques developed by research projects.

We will be visiting some more novel and interesting assistive technologies in forthcoming posts.